In this article we’ll discuss how you can block unwanted users or bots from accessing your website via .htaccess rules. The .htaccess file is a hidden file on the server that can be used to control access to your website among other features.

In the steps below, we’ll walk through several different ways to block unwanted users from accessing your website.

- Edit your .htaccess file

- Block by IP address

- Block bad users based on their User-Agent string

- Block by referer

- Temporarily block bad bots

Edit your .htaccess file

To use any of the forms of blocking an unwanted user from your website, you’ll need to edit your .htaccess file.

- Log in to your cPanel.

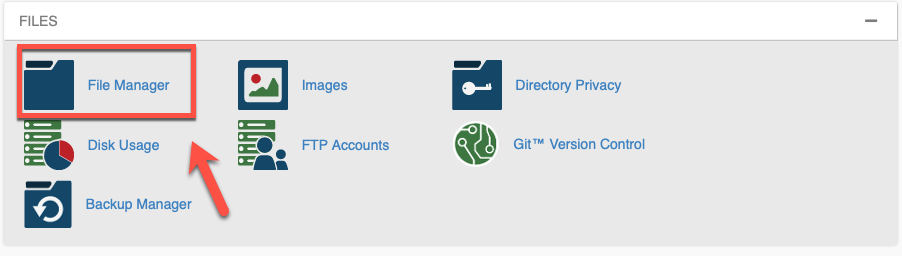

- Under Files, click on File Manager.

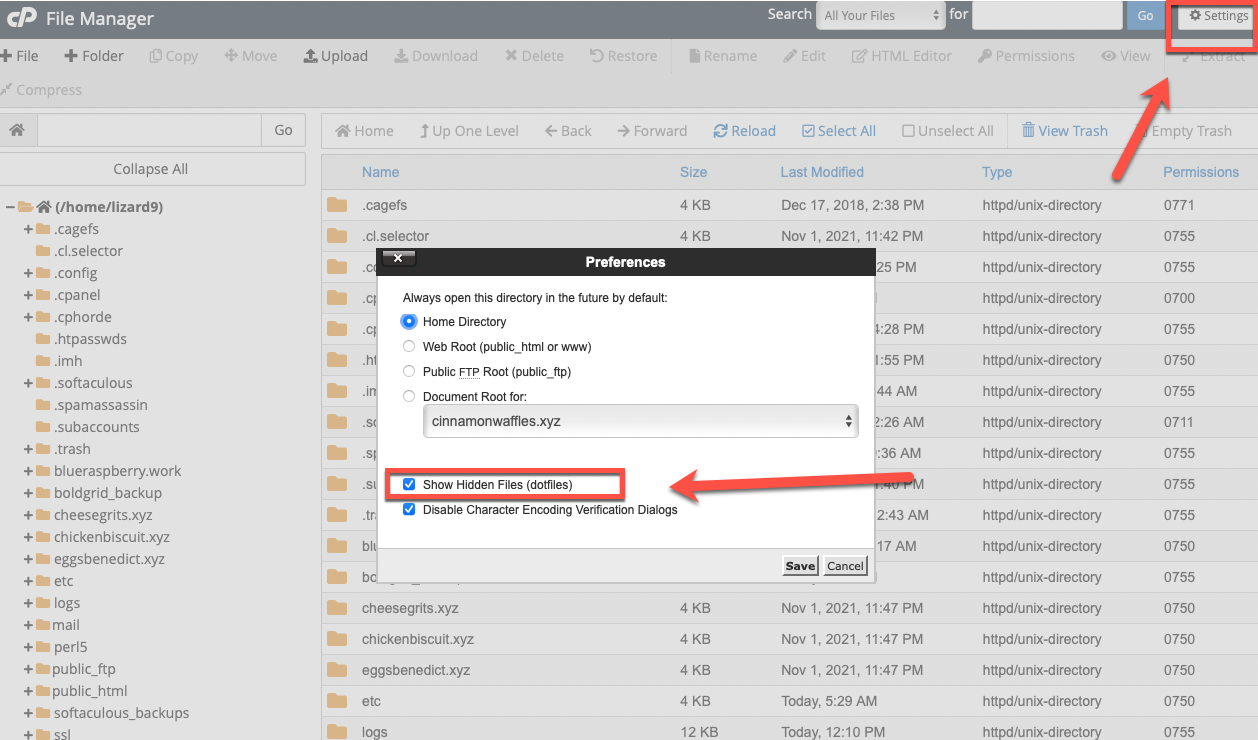

- Click on Settings in the upper-right. Be sure that Show Hidden Files (dotfiles) is checked. Click Save.

- Select the directory for the site you want to edit. Please note that if the site is your primary domain, you will select public_html.

- If your site is missing an .htaccess file, click on + File at the top-left and name the file .htaccess. Be sure the listed directory matches the site you are working on and click Create New File.

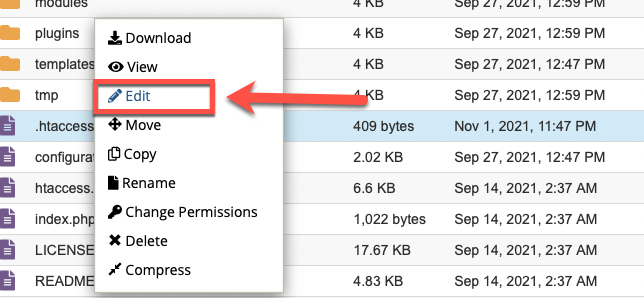

- If your .htaccess file already exists, or you’ve finished creating one, right-click on the .htaccess file and select Edit.

- If a text editor encoding dialog box pops up, simply click Edit.

Block by IP address

You might have one particular IP address, or multiple IP addresses that are causing a problem on your website. In this event, you can simply outright block these problematic IP addresses from accessing your site.

Block a single IP address

If you just need to block a single IP address, or multiple IPs not in the same range, you can do so with this rule:

deny from 123.123.123.123

Block a range of IP addresses

To block an IP range, such as 123.123.123.1 – 123.123.123.255, you can leave off the last octet:

deny from 123.123.123

You can also use CIDR (Classless Inter-Domain Routing) notation for blocking IPs:

- To block the range 123.123.123.0 – 123.123.123.255, use 123.123.123.0/24

- To block the range 123.123.64.0 – 123.123.127.255, use 123.123.64.0/18

deny from 123.123.123.0/24
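If you want to double-check which addresses a CIDR block covers before adding a `deny from` line, Python's standard `ipaddress` module can compute it. This is a small sketch, not part of the original article; the function name `describe_cidr` is hypothetical. For example, it confirms that 123.123.64.0/18 covers 123.123.64.0 through 123.123.127.255.

```python
import ipaddress


def describe_cidr(cidr: str) -> tuple[str, str, int]:
    """Return (first address, last address, number of addresses) for a CIDR block.

    ip_network() is strict by default: it raises ValueError when host bits
    are set (e.g. "123.123.123.0/18"), which flags a mistyped block.
    """
    net = ipaddress.ip_network(cidr)
    return str(net.network_address), str(net.broadcast_address), net.num_addresses


for cidr in ("123.123.123.0/24", "123.123.64.0/18"):
    first, last, size = describe_cidr(cidr)
    # Apache does not allow trailing comments on a directive line,
    # so the note is printed on its own line above the deny rule.
    print(f"# {first} - {last} ({size} addresses)")
    print(f"deny from {cidr}")
```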

Block bad users based on their User-Agent string

Some malicious users send requests from different IP addresses but use the same User-Agent for all of them. In that case, you can block them by their User-Agent string.

Block a single bad User-Agent

If you just wanted to block one particular User-Agent string, you could use this RewriteRule:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} Baiduspider [NC]

RewriteRule .* - [F,L]

Alternatively, you can also use the BrowserMatchNoCase Apache directive like this:

BrowserMatchNoCase "Baiduspider" bots

Order Allow,Deny

Allow from all

Deny from env=bots

Block multiple bad User-Agents

If you wanted to block multiple User-Agent strings at once, you could do it like this:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(Baiduspider|HTTrack|Yandex).*$ [NC]

RewriteRule .* - [F,L]

Or you can also use the BrowserMatchNoCase directive like this:

BrowserMatchNoCase "Baiduspider" bots

BrowserMatchNoCase "HTTrack" bots

BrowserMatchNoCase "Yandex" bots

Order Allow,Deny

Allow from all

Deny from env=bots
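Before deploying a User-Agent pattern, it can help to test it against sample strings. The sketch below uses Python's `re` module with `re.IGNORECASE` to approximate the `[NC]` flag. mod_rewrite uses PCRE, which behaves the same way for a simple alternation like this one, though more complex patterns may differ. The sample User-Agent strings are illustrative only.

```python
import re

# Same pattern as the RewriteCond above; re.IGNORECASE approximates [NC].
BAD_AGENTS = re.compile(r"^.*(Baiduspider|HTTrack|Yandex).*$", re.IGNORECASE)


def would_block(user_agent: str) -> bool:
    """Return True if the RewriteCond pattern would match this User-Agent."""
    return BAD_AGENTS.search(user_agent) is not None


samples = [
    "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)",
    "Mozilla/4.5 (compatible; HTTrack 3.0x; Windows 98)",
    "Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/115.0",
]
for ua in samples:
    print(would_block(ua), ua)
```

Because `^.*` and `.*$` allow anything on either side, the pattern behaves like a substring search: `Yandex` also catches `YandexBot` and any other agent containing that word.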

Block by referer

Block a single bad referer

If you just want to block a single bad referer, such as example.com, you could use this RewriteRule:

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC]

RewriteRule .* - [F]

Alternatively, you could also use the SetEnvIfNoCase Apache directive like this:

SetEnvIfNoCase Referer "example\.com" bad_referer

Order Allow,Deny

Allow from all

Deny from env=bad_referer

Block multiple bad referers

To block multiple referers, such as example.com and example.net, you could use:

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC,OR]

RewriteCond %{HTTP_REFERER} example\.net [NC]

RewriteRule .* - [F]

Or you can also use the SetEnvIfNoCase Apache directive like this:

SetEnvIfNoCase Referer "example\.com" bad_referer

SetEnvIfNoCase Referer "example\.net" bad_referer

Order Allow,Deny

Allow from all

Deny from env=bad_referer
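In these referer patterns the dot is a regular-expression metacharacter, so an unescaped `example.com` also matches a referer such as `exampleXcom.org`. Escaping it as `example\.com` makes the dot literal. The Python check below is a sketch for illustration, not part of Apache; `referer_pattern` is a hypothetical helper and the referer values are made up. Note that even the escaped form is unanchored, so it still matches any referer that merely contains `example.com`.

```python
import re


def referer_pattern(domain: str) -> re.Pattern:
    """Build a case-insensitive pattern that matches the domain literally.

    re.escape() backslash-escapes the dots, like writing example\\.com
    in the RewriteCond or SetEnvIfNoCase line.
    """
    return re.compile(re.escape(domain), re.IGNORECASE)


loose = re.compile("example.com", re.IGNORECASE)  # unescaped dot matches any character
strict = referer_pattern("example.com")           # escaped dot matches only "."

for referer in ("https://example.com/page", "https://exampleXcom.org/"):
    print(referer, bool(loose.search(referer)), bool(strict.search(referer)))
```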

Temporarily block bad bots

In some cases, you might not want to send a 403 response, which is simply an access-denied message. For example, suppose your site is getting a large one-day spike in traffic from a promotion you’re running, and you don’t want good search engine bots such as Google or Yahoo to start indexing your site while the extra traffic is already stressing the server.

The following code sets up a basic error document for a 503 response, which is the standard way to tell a search engine that its request is temporarily blocked and that it should try again later. This is different from temporarily denying access with a 403 response: Google has confirmed that after a 503 response it will come back and try to index the page again, instead of dropping it from its index.

The following code catches any request whose User-Agent contains the word bot, crawl, or spider, which matches most of the major search engines. The second RewriteCond line still allows these bots to request the robots.txt file to check for new rules, but any other request simply gets a 503 response with the message “Site temporarily disabled for crawling”.

Typically you don’t want to leave a 503 block in place for longer than 2 days. Otherwise Google might start to interpret this as an extended server outage and could begin to remove your URLs from their index.

ErrorDocument 503 "Site temporarily disabled for crawling"

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(bot|crawl|spider).*$ [NC]

RewriteCond %{REQUEST_URI} !^/robots\.txt$

RewriteRule .* - [R=503,L]

This method is useful if you notice new bots crawling your site and causing excessive requests, and you want to block them or slow them down with your robots.txt file: it lets you answer their requests with a 503 until they read your new robots.txt rules and start obeying them. You can read about how to stop search engines from crawling your website for more information.
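To see which requests the temporary-block rules above would answer with a 503, the two conditions can be mirrored in a few lines of Python. This is a rough model of the RewriteCond logic (consecutive conditions are ANDed by default), not Apache itself; the function name `gets_503` is hypothetical.

```python
import re

# Mirrors: RewriteCond %{HTTP_USER_AGENT} ^.*(bot|crawl|spider).*$ [NC]
BOT_PATTERN = re.compile(r"^.*(bot|crawl|spider).*$", re.IGNORECASE)
# Mirrors the pattern in: RewriteCond %{REQUEST_URI} !^/robots\.txt$
ROBOTS_TXT = re.compile(r"^/robots\.txt$")


def gets_503(user_agent: str, request_uri: str) -> bool:
    """Return True when both RewriteCond lines match (they are ANDed by default)."""
    is_bot = BOT_PATTERN.search(user_agent) is not None
    # The second condition is negated with "!", so robots.txt stays reachable.
    not_robots_txt = ROBOTS_TXT.search(request_uri) is None
    return is_bot and not_robots_txt


print(gets_503("Mozilla/5.0 (compatible; Googlebot/2.1)", "/index.php"))   # True
print(gets_503("Mozilla/5.0 (compatible; Googlebot/2.1)", "/robots.txt"))  # False
print(gets_503("Mozilla/5.0 (Windows NT 10.0) Firefox/115.0", "/"))       # False
```

Any User-Agent that merely contains the letters "bot", "crawl", or "spider" is caught, so it is worth checking your logs for legitimate visitors that happen to match.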

You should now understand how to use a .htaccess file to help block access to your website in multiple ways.

Hi,

I have written in my .htaccess:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(AhrefsBot|Ahrefs).*$ [NC]

RewriteRule .* - [F,L]

and also in my robots.txt:

Crawl-delay: 30

User-agent: AhrefsBot

Disallow: /

But when I check my logs, I still see it 5 times every minute:

Mozilla/5.0 (compatible; AhrefsBot/6.1; +https://ahrefs.com/robot/)

Can someone help me, please?

No, it’s not a coding issue. As you can see from my post, they’re requesting files that don’t exist (404’d).

This is a very aggressive vulnerability-scanning bot that searches for accessible scripts to inject.

Log example:

47.52.167.174 - - [30/Aug/2018:10:06:22 +0200] "GET /index.php HTTP/1.1" 404 496 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:23 +0200] "GET /phpMyAdmin/index.php HTTP/1.1" 404 507 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:23 +0200] "GET /pmd/index.php HTTP/1.1" 404 500 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:25 +0200] "GET /pma/index.php HTTP/1.1" 404 500 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:25 +0200] "GET /PMA/index.php HTTP/1.1" 404 500 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:25 +0200] "GET /PMA2/index.php HTTP/1.1" 404 501 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:26 +0200] "GET /pmamy/index.php HTTP/1.1" 404 502 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:26 +0200] "GET /pmamy2/index.php HTTP/1.1" 404 503 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:26 +0200] "GET /mysql/index.php HTTP/1.1" 404 502 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:27 +0200] "GET /admin/index.php HTTP/1.1" 404 502 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:27 +0200] "GET /db/index.php HTTP/1.1" 404 499 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:29 +0200] "GET /dbadmin/index.php HTTP/1.1" 404 504 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:29 +0200] "GET /web/phpMyAdmin/index.php HTTP/1.1" 404 511 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:29 +0200] "GET /admin/pma/index.php HTTP/1.1" 404 506 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:30 +0200] "GET /admin/PMA/index.php HTTP/1.1" 404 506 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:30 +0200] "GET /admin/mysql/index.php HTTP/1.1" 404 508 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:30 +0200] "GET /admin/mysql2/index.php HTTP/1.1" 404 509 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:31 +0200] "GET /admin/phpmyadmin/index.php HTTP/1.1" 404 513 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:31 +0200] "GET /admin/phpMyAdmin/index.php HTTP/1.1" 404 513 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:31 +0200] "GET /admin/phpmyadmin2/index.php HTTP/1.1" 404 514 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:32 +0200] "GET /mysqladmin/index.php HTTP/1.1" 404 507 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:33 +0200] "GET /mysql-admin/index.php HTTP/1.1" 404 508 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:33 +0200] "GET /phpadmin/index.php HTTP/1.1" 404 505 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:33 +0200] "GET /phpmyadmin0/index.php HTTP/1.1" 404 508 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:34 +0200] "GET /phpmyadmin1/index.php HTTP/1.1" 404 508 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:34 +0200] "GET /phpmyadmin2/index.php HTTP/1.1" 404 508 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:34 +0200] "GET /myadmin/index.php HTTP/1.1" 404 504 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:35 +0200] "GET /myadmin2/index.php HTTP/1.1" 404 505 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:35 +0200] "GET /xampp/phpmyadmin/index.php HTTP/1.1" 404 513 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:35 +0200] "GET /phpMyadmin_bak/index.php HTTP/1.1" 404 511 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:36 +0200] "GET /www/phpMyAdmin/index.php HTTP/1.1" 404 511 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:37 +0200] "GET /tools/phpMyAdmin/index.php HTTP/1.1" 404 513 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:37 +0200] "GET /phpmyadmin-old/index.php HTTP/1.1" 404 511 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:37 +0200] "GET /phpMyAdminold/index.php HTTP/1.1" 404 510 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:38 +0200] "GET /phpMyAdmin.old/index.php HTTP/1.1" 404 511 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:38 +0200] "GET /pma-old/index.php HTTP/1.1" 404 504 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:38 +0200] "GET /claroline/phpMyAdmin/index.php HTTP/1.1" 404 517 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:38 +0200] "GET /typo3/phpmyadmin/index.php HTTP/1.1" 404 513 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:39 +0200] "GET /phpma/index.php HTTP/1.1" 404 502 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:39 +0200] "GET /phpmyadmin/phpmyadmin/index.php HTTP/1.1" 403 529 "-" "Mozilla/5.0"

47.52.167.174 - - [30/Aug/2018:10:06:39 +0200] "GET /phpMyAdmin/phpMyAdmin/index.php HTTP/1.1" 404 518 "-" "Mozilla/5.0"

So, back to my question: how does one block this?

Nearly all of them come from Tencent and Alibaba Cloud IP addresses, so I know I can do an IP ban, but that seems like overkill.

Sorry for the confusion on this issue. I’ve looked through forums for any inkling of such a hackbot issue, and I’m not finding anything. If it were a widespread issue, it would be red-flagged and definitely under discussion.

Your log shows that the GET requests are LOOKING for files that don’t exist and are being given the proper error, a 404. They’re not hitting a file. That is the proper behavior for a website. If there is a flood of requests coming from a single point, then it’s a form of brute forcing, and it is typically picked up by existing server security systems. All code on the server should be secure, and there are multiple ways to make sure that an injection cannot be performed. As advised earlier, you will need to make sure that any code added to the server is hardened to prevent intrusion. This can be done by an experienced developer. If you want an example of this in discussion, check out this article.

If there is truly a hackbot using Mozilla, my advice to you is to contact Mozilla or speak with a legitimate security firm like Sucuri. They can help identify if there are issues, as well as provide other solutions if you are not satisfied with what is provided through our hosting services.

The newest widespread hack tool simply uses “Mozilla/5.0” as its user agent. How would you propose to deal with this? Blocking Mozilla/5.0 or Mozilla/5\.0 will block 99% of all legitimate browsers out there, so that’s not an option.

POST /wuwu11.php HTTP/1.1" 404 468 "-" "Mozilla/5.0"

POST /xw1.php HTTP/1.1" 404 465 "-" "Mozilla/5.0"

…….

Hello,

Thanks for your question about the user agent issue with Mozilla/5.0. In reviewing the issue, it appears to be more of a coding issue for the website than for the servers hosting it, but that doesn’t mean we are completely washing our hands of the issue. We cannot state what security measures are being taken at the server level, but there are measures (both active and passive) in place to help identify and prevent issues. Security in your website’s code is also necessary to help prevent your site from being hacked. Several discussions of the issue state that the user agent is not really necessary, and that they have not seen compatibility issues without it, though there are most likely problems with this; I found the discussion concerning user agents in HTTP/2. If you are not familiar with securing your code, then I would recommend you speak with an experienced developer for further advice. Other than that, the same mantra of “keep everything up-to-date and keep backups” also applies.
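One approach not covered in the reply above: real browsers send a much longer User-Agent string, so an anchored pattern such as `^Mozilla/5\.0$` matches only the bare value seen in these logs. In .htaccess, that would be a `RewriteCond %{HTTP_USER_AGENT} ^Mozilla/5\.0$` line followed by `RewriteRule .* - [F,L]`; test it carefully before relying on it. The Python check below is a sketch showing the difference between the anchored and unanchored forms; the browser string is a representative example, and `classify` is a hypothetical helper.

```python
import re

ANCHORED = re.compile(r"^Mozilla/5\.0$")  # only the bare string, nothing else
UNANCHORED = re.compile(r"Mozilla/5\.0")  # any User-Agent containing it


def classify(user_agent: str) -> tuple[bool, bool]:
    """Return (matches anchored pattern, matches unanchored pattern)."""
    return (ANCHORED.search(user_agent) is not None,
            UNANCHORED.search(user_agent) is not None)


bare = "Mozilla/5.0"
browser = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
           "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36")
print(classify(bare))     # (True, True)
print(classify(browser))  # (False, True)
```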

Hello,

I only want to grant access to those who use the NFC sticker to enter the page. I don’t want anyone to enter by using a direct URL.

How?

As I understand it, using the “NFC sticker” still relies on using a URL. I have never implemented this myself; however, from what I have read, it seems to just be a link that you use.

I want to block bots (only bots, not real users).

I have access to the IPs.

What code should I use in .htaccess?

If you want to block bots, then you use a robots.txt file. Click on the link and follow the tutorial to keep the bots off your site.

Hello

How can I block robots by GeoIP?

If you have access to the IP ranges for the locations you’re trying to block, you can use the Block a range of IP addresses rules listed in this article.
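Country IP lists are often published as start-end ranges rather than CIDR blocks. As a sketch, Python's standard `ipaddress.summarize_address_range` can turn such a range into the smallest set of CIDR blocks for `deny from` lines. The function name `range_to_deny_lines` and the sample range are hypothetical.

```python
import ipaddress


def range_to_deny_lines(first: str, last: str) -> list[str]:
    """Convert an inclusive IPv4 range into 'deny from' lines using CIDR blocks."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    # summarize_address_range yields the minimal list of networks covering the range.
    networks = ipaddress.summarize_address_range(start, end)
    return [f"deny from {net}" for net in networks]


for line in range_to_deny_lines("123.123.64.0", "123.123.128.255"):
    print(line)
```

The sample range produces `deny from 123.123.64.0/18` and `deny from 123.123.128.0/24`. As noted elsewhere in this thread, very long lists make the .htaccess file heavy, so summarizing ranges this way also keeps the file shorter.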

I’ve had one specific hacker make a huge number of login attempts to my WordPress site. The hacker never uses a legit IP address. Instead, the hacker uses forged IP addresses. I have found one thing that is consistent. The hacker uses Firefox version 0.0 running on Win7. What syntax could I use to apply a block to my .htaccess file, just for that one Firefox?

Hello Bobby G.,

Thanks for the question about blocking the old Firefox browser version. This question is pretty common and you can run a search in your favorite search engine for solutions. I found this one from StackOverflow to be the most useful: Block obsolete browsers. The changes would need to be made in the .htaccess file.

If you have any further questions or comments, please let us know.

Regards,

Arnel C.

If you deny a user agent in the .htaccess file, will that user agent still have access to the redirects within the .htaccess file?

Hello Lauren,

.htaccess files are read from top to bottom. If you’re putting your redirects at the top of the file, then anything hitting the file will be redirected BEFORE being denied access based on the conditions that you have placed in your redirects/rewrites.

I hope this helps to answer your question, please let us know if you require any further assistance.

Regards,

Arnel C.

Is it possible to dynamically block an IP that is accessing a certain path with .htaccess? I don’t have a WordPress site, but I see a lot of hits on /wp-login.php. So I know that all clients looking for that file are bad, and I would like to block these IPs via .htaccess.

How can I achieve that?

Thank you very much,

Walden.

Hello Walden,

Sorry for the problems with the WordPress issues. We do have a guide to multiple ways of securing your site; please see Lock down your WordPress Admin login. Dynamically blocking IPs is difficult because an attack can come from thousands of addresses, and a system that learns by recording and blocking each address would eventually slow down from the sheer volume of entries it has to check each time someone accesses your site. The guide we provide gives you multiple solutions for locking down your site that should help with your login issue. Also, remember that a lot of the traffic may simply be robots indexing files for search engines. You can use the robots.txt file to keep robots from hitting your login file.

I hope that helps to answer your questions! If you require further assistance, please let us know!

Regards,

Arnel C.

Hello Walden,

Sorry for the problem with your WordPress login. Dynamically blocking an IP would be difficult at best and possibly cause performance issues with the site. Please use our tutorial for locking down your WordPress site. Also, please understand that a lot of those hits could also be legitimate traffic from search engine robots simply indexing your site. If you want to limit that traffic, see How to stop search engine robots.

If you have any further questions, please let us know.

Kindest regards,

Arnel C.

Hello Walden,

Sorry for the problem with your WordPress login. It’s odd, because you say that you don’t have a WordPress site. Why do you have the wp-login.php file? You can still use our guide to block people from hitting the login, though it’s not really a working login. Blocking access to that file would be difficult at best, but if you’re not using it, then you should remove it or rename it if you’re worried about it being consistently targeted.

If you have any further questions, please let us know.

Kindest regards,

Arnel C.
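If you still want to block the specific addresses that have requested /wp-login.php, one offline option (not something suggested in the replies above) is to pull them out of a downloaded Raw Access log and add the resulting `deny from` lines by hand. The sketch below assumes the standard Apache combined log format, where the client IP is the first field and the request line is the first quoted field; the function name `ips_requesting` and the sample lines (using documentation IP ranges) are hypothetical. Keep in mind the caveat above that attacks can come from many addresses, so a long list may not be worth the overhead.

```python
import re

# Combined log format: IP ident user [time] "METHOD /path HTTP/x" status size ...
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "\S+ (\S+)[^"]*"')


def ips_requesting(log_lines, path: str) -> list[str]:
    """Return unique client IPs, in first-seen order, that requested `path`."""
    seen: dict[str, None] = {}  # dict keys keep insertion order and drop duplicates
    for line in log_lines:
        match = LOG_LINE.match(line)
        # Ignore any query string, e.g. /wp-login.php?action=lostpassword
        if match and match.group(2).split("?", 1)[0] == path:
            seen.setdefault(match.group(1), None)
    return list(seen)


sample = [
    '192.0.2.10 - - [30/Aug/2018:10:06:22 +0200] "POST /wp-login.php HTTP/1.1" 404 496 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [30/Aug/2018:10:06:23 +0200] "GET /index.php HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '192.0.2.10 - - [30/Aug/2018:10:06:25 +0200] "GET /wp-login.php?action=lostpassword HTTP/1.1" 404 500 "-" "Mozilla/5.0"',
    '203.0.113.5 - - [30/Aug/2018:10:06:26 +0200] "GET /wp-login.php HTTP/1.1" 404 500 "-" "Mozilla/5.0"',
]
for ip in ips_requesting(sample, "/wp-login.php"):
    print(f"deny from {ip}")
```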

You have got syntax errors in your code examples! For example, you are missing a [NC]:

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC,OR]

RewriteCond %{HTTP_REFERER} example\.net

RewriteRule .* - [F]

should be

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC,OR]

RewriteCond %{HTTP_REFERER} example\.net [NC]

RewriteRule .* - [F]

John, I’m not seeing anything in the Apache documentation for the RewriteCond Directive that indicates the omission you mentioned as a syntax error. However, this is still a good addition, and I have updated the article to include your suggested edit.

Simon, give your .htaccess a try with a country IP list for Russia. You should be able to find one on the web that you can copy and paste into your .htaccess file. It worked great for us, but I will caution you not to get too carried away with blocking every country IP list that comes along, as it can make your .htaccess file tremendously heavy, which in turn can be an extra load on your resources. There seems to be a good bit of traffic coming from Germany, the Netherlands, and Estonia as of late, so it might not hurt to watch your raw access logs for those also. Good luck!

Scott, thank you very much for your input, and you’re very correct that blocking by an entire country is typically too heavy handed to be worth the performance impact that it will take on your site.

Yep, tried robots.txt and .htaccess, but still 24% of my traffic comes from Russia, despite my site being an Australian window-cleaning site!

Since it is originating from a specific region, you can try to Block a country from your site using htaccess.

Thank you,

John-Paul

Is there a list of bots to block? I need to block all bots except Google. My competitors appear to have attacked my backlinks, which have gone down from 3500 to 2400 in 2 weeks.

There are some examples in the “Block multiple bad User-Agents” section above, but it really depends on the specific bots that are accessing your site. For example, a hacker could just change the name of the bot.

Thank you,

John-Paul

Thanks Arn.

Will look into, but really need to try using htaccess.

Cheers!

Very informative article, thank you for sharing!

I have a slightly different issue and am having a hard time finding correct info on how to solve it. Maybe you can point me in the right direction.

I’m building small desktop apps that run from a portable server. They are for use in the classroom and need a little security to prevent kids from opening the pages in a browser; the pages should only be accessed using the desktop app software.

I can set the User-Agent to whatever I like in the app, in this instance “chromesoft”.

What I need is to allow access to the PHP pages only from inside the desktop app.

I have tried many methods with .htaccess, but nothing seems to work. I was told this should work, but it does not:

RewriteEngine on

RewriteCond %{HTTP_USER_AGENT} !=chromesoft

RewriteRule .* - [F,L]

Any input would be greatly appreciated.

Hello Woody,

Apologies for not being able to provide code for you that might solve the problem. You may want to look at mod sec rules (ModSecurity Core Rule Set (CRS)) to see if that might meet your needs to restrict access in the app. Hopefully, that will provide a direction that you may want to pursue to resolve the issue you’re facing.

If you have any further questions or comments, please let us know.

Regards,

Arnel C.

hi

my website is WWW.*******.com

A few months back my WordPress site was infected: a file, ap1.php, was put in the root, and there were thousands of hits on that file from different IP addresses all over the world. So I removed all the files and reset my hosting account. Now the problem is that there are still thousands of hits to this file every day. Although it shows document not found, it is eating up my site’s bandwidth. Is there anything I can do to stop this, as my website’s resources are being limited by my hosting provider? A sample of the address is given below.

All the hits are to this link, with a different string after ap1.php and from different IPs.

Hello Ayush,

Have you tried to block by the user agent?

Kindest Regards,

Scott M

Okay here is one I hope can be addressed.

So I have been getting high resource usage emails, and when I look at them, even though the attacker’s IP seems to change, they always seem to be using an agent that is just listed like this:

“-”

Is there a proper way to write the .htaccess to block this “unknown agent” entirely or would that end up blocking everyone since it’s just a “-” and nothing else? How would the htaccess file be written to block such unknown/blank agents?

Hello Faelandaea,

Sorry for the problems with the high resource usage emails and the unknown user agent. We do have an article that teaches you how to look in the access logs to identify and block robots in the .htaccess. Check out the article here.

If you are repeatedly seeing only “-” as the agent, try referencing it using one of the options in the article above. Otherwise, look through your log files to see if you can get a more specific reference for that agent.

If you continue to have resource issues, I highly suggest that you submit a verified support ticket asking for details on what’s causing the resource issues. They can specify that for you.

If you have any further questions or comments, please let us know.

Regards,

Arnel C.

Can you add a rule to your temporary bot blocking to whitelist the good search engines, or base URLs in general?

Hello chris,

Thank you for contacting us. Yes, it is possible to code something like you suggest.

But typically you would just block the unwanted bots, as described in the Block bad users based on their User-Agent string section above.

To whitelist the good search engines, you would have to block all traffic first, then allow the traffic you want to access your site.

Thank you,

John-Paul
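As a sketch of the whitelisting idea (not code from the reply above), one way is to add a negated condition such as `RewriteCond %{HTTP_USER_AGENT} !(Googlebot|bingbot) [NC]` alongside the existing conditions in the temporary-block rules, so that listed crawlers are exempt from the 503. The Python model below mirrors that combined logic; the allow-list contents and the function name are assumptions. User-Agent strings can be faked, so this exempts anything that merely claims to be one of those crawlers.

```python
import re

# Equivalent to ^.*(bot|crawl|spider).*$ with [NC]: a substring search.
BOT_PATTERN = re.compile(r"(bot|crawl|spider)", re.IGNORECASE)
# Hypothetical allow-list, modelling: RewriteCond %{HTTP_USER_AGENT} !(Googlebot|bingbot) [NC]
ALLOWED_BOTS = re.compile(r"(Googlebot|bingbot)", re.IGNORECASE)


def gets_503_with_allowlist(user_agent: str, request_uri: str) -> bool:
    """Model of the temporary-block conditions plus a negated allow-list condition."""
    is_bot = BOT_PATTERN.search(user_agent) is not None
    is_allowed_bot = ALLOWED_BOTS.search(user_agent) is not None
    is_robots_txt = request_uri == "/robots.txt"
    # All conditions are ANDed, as with consecutive RewriteCond lines.
    return is_bot and not is_allowed_bot and not is_robots_txt


print(gets_503_with_allowlist(
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)", "/"))  # False
print(gets_503_with_allowlist(
    "Mozilla/5.0 (compatible; AhrefsBot/6.1; +https://ahrefs.com/robot/)", "/"))       # True
```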

I want to stop all bots please. Is this the code I would use in my .htaccess file?

`RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(AhrefsBot|Baiduspider|bingbot|Ezooms|Googlebot|HTTrack|MJ12bot|Yahoo!\ Slurp|Yandex).*$ [NC]

RewriteRule .* - [F,L]`

Hello Marcus,

Give it a try and see if it works that way. You can also simply list the bots separately. Check the RewriteRule as well; it may work in its current state, or you can adjust it to look like the sample code below:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^WWWOFFLE [OR]

RewriteCond %{HTTP_USER_AGENT} ^Xaldon\ WebSpider [OR]

RewriteCond %{HTTP_USER_AGENT} ^Zeus

RewriteRule ^.* - [F,L]

Kindest Regards,

Scott M

Try this:

deny from nyc.res.rr.com

Hi

Is it possible to block access by using the host company’s routing server, i.e. cpe-66-108-10-152.nyc.res.rr.com?

If so – what would be the syntax?

Regards

Steve

Hello Steve,

Unfortunately, that is not a common way to block users, so it has never been implemented. I checked that IP address, and it seems to be from Appalachian State University. Who are you trying to block specifically?

Best Regards,

TJ Edens

Disregard that last post; it DOES work. I initially only checked the IP addresses that were accessing my site through AWStats alone. After checking the ERROR LOGS, I see that even though the Baidu spider was accessing my site, it received the error message “client denied by server configuration”. This is indeed what I wanted to see.

Your suggestion regarding how to block Baiduspider doesn’t work. They still hit daily:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} Baiduspider [NC]

RewriteRule .* - [F,L]

PrestaShop has a big problem with its robots.txt file. It has functionality in the back office to generate the robots.txt file; however, it would be better if the generated file excluded products added to the cart and site visitors. Currently I have 6-10 carts being generated weekly, which is annoying, as I have to delete them manually.

The visitor stats are totally incorrect, as my site does not have actual visitors at 2am in the morning. I hope this can be resolved without me physically amending the table.

Jay

Hello Jay,

The robots.txt file is primarily used to stop search engine robots from hitting sites or certain portions of sites. It’s not made to stop someone from spamming your site. You may want to check PrestaShop’s plugins for other ways to manage your carts (there are several that make managing carts much easier), or you may want to use the article above to stop certain IP addresses from accessing your site if you can isolate the culprits.

I hope that helps to answer your question! If you require further assistance, please let us know!

Regards,

Arnel C.

Thanks for this. .htaccess and this code are all new to me, and I was still unclear about which code is best for me and my scenario; likely I need to paste a few:

Please kindly give me the exact code to paste in to stop this daily occurrence of page views across my entire site, which is driving me mad with false impressions of so-called real visitors. The biggest offender is:

Websitewelcome.com

Current Ip being used is

192.254.189.18

(though likely to change, as we know!)

Another prolific abuser is:

Provider: Hetzner Online AG

IP: 88.198.48.46

Can I permanently block these two companies above?

And for future problems: sometimes these spam bots have an actual URL; other times only their so-called user name/provider and IP can be seen (not a URL).

Hence I’m not sure of the exact code to cover multiple spam bots like this.

My concern is that they will simply change their IP, so please give me code where I can add further IPs for them, or block them by their user name,

and perhaps code for multiple spam bots if you know their URL and name.

In short:

I would like to include the best typical code scenarios to cover multiple bot names, URLs, or IPs, so that I can simply add new offenders to the appropriate code.

This is another one among the five to seven regulars I get in any given day or week:

IP-Adresse: 188.143.232.14

Provider: Petersburg Internet Network ltd.

Organisation: Petersburg Internet Network LLC

many thanks indeed!

Jenny

Hi, I notice that using the File Manager in my cPanel, I can see 2 .htaccess files: one in the public_html folder and one in the level above it. I see that the IPs I have blocked are added to the one in public_html, but what is the other file in the level above it used for?

Hello John,

That .htaccess is generally used for setting the PHP version you would like your account to use. You can also block IP addresses at that level, as it would serve the same purpose.

Best Regards,

TJ Edens

Very well described. Thank you. However, I would like to know if I inserted the code in the correct place in the .htaccess file on my WP site. Please see below where I inserted this code:

RewriteRule . /index.php [L]

# END WordPress

RewriteEngine On

RewriteCond %{HTTP_REFERER} 4webmasters\.org [NC]

RewriteRule .* - [F]

Redirect 301 / www……….

I have 301s after #End WordPress. Should the code go after #END WordPress?

Thank you.

How do I find out which IPs to block?

Hello Karen,

You can use the Raw Access logs in cPanel. You can also submit a support ticket requesting information on IP addresses that have hit your website(s).

I hope this helps to answer your question, please let us know if you require any further assistance.

Regards,

Arnel C.
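Once you have downloaded and unpacked a Raw Access log from cPanel, a short script can rank the busiest client IPs. This is a sketch, assuming the usual Apache log layout where the client IP is the first space-separated field; the file name `access_log` and the function name `top_ips` are hypothetical. A high request count alone does not prove an IP is malicious, so check what it requested before blocking it.

```python
from collections import Counter


def top_ips(log_lines, limit: int = 10) -> list[tuple[str, int]]:
    """Count requests per client IP (first field of each line), busiest first."""
    counts = Counter(line.split(" ", 1)[0] for line in log_lines if line.strip())
    return counts.most_common(limit)


# Hypothetical usage with a downloaded, uncompressed log file:
# with open("access_log", encoding="utf-8", errors="replace") as fh:
#     for ip, hits in top_ips(fh):
#         print(ip, hits)

sample = [
    '47.52.167.174 - - [30/Aug/2018:10:06:22 +0200] "GET /index.php HTTP/1.1" 404 496 "-" "Mozilla/5.0"',
    '47.52.167.174 - - [30/Aug/2018:10:06:25 +0200] "GET /pma/index.php HTTP/1.1" 404 500 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [30/Aug/2018:10:06:26 +0200] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
print(top_ips(sample))  # [('47.52.167.174', 2), ('198.51.100.7', 1)]
```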

InMotion Hosting support needs to provide a full sample .htaccess file instead of just snippets for specific actions like blocking a bad referer and temporarily blocking a robot. As far as I can tell from reading the Apache documentation on the rewrite and setenvif modules, if a user puts both of the following blocks into a .htaccess file, the second block is not going to be processed.

If the first of the following blocks appears before the other, because of the L in the rewrite option, that will be the last rewrite rule processed. If the second of the following blocks is placed before the other in the .htaccess file, because the F option implies L in the rewrite rule, no other rewrite rule that follows is going to be processed.

A complete sample .htaccess file that includes

where users could just change specific domain names, ip addresses, file names, and extensions, I think, would be helpful and would save users time in researching the .htaccess and Apache directives.

ErrorDocument 503 "Site temporarily disabled for crawling"

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(bot|crawl|spider).*$ [NC]

RewriteCond %{REQUEST_URI} !^/robots\.txt$

RewriteRule .* - [R=503,L]

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC,OR]

RewriteCond %{HTTP_REFERER} example\.net

RewriteRule .* - [F]

Thanks, this is working for my site.

I would like to know if this affects subdomains and add-on domains. For example, I have an account with two domains, and 5 subdomains, all within the /home/username/ folder. Will modifying the .htaccess file in that root directory take care of everything in the subdirectories?

Please, I am having issues with resource overages, and I have done everything I was asked to do: I put a good CAPTCHA in place, deleted the spam messages in my commentmeta table, and set my webmaster tools to a 30-second delay, but I am still getting the same issue. I'm losing clients because of this, since they are scared my site will be suspended. I was told that if I block certain bots, I won't be listed on Google or Bing. How can you help resolve this without my moving to another plan (VPS)? I'm based in Nigeria, and I really need your help.

Hello albert,

Thank you for contacting us. We are happy to help, but it is difficult since we will need to review the specific nature of the traffic. If you received an email notification or suspension from our System Admin team, just reply to the email and request more information, or a review. This will reopen the ticket with our System Admin team.

The guide above explains how to block bots and IPs, but I do not recommend blocking anything that you need. For example, if your users are in America, I would not block Google.

If you do not have any visitors from China, then I would block the Baidu bot from crawling your website, since it belongs to a Chinese search engine.
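If you do decide to block Baidu, one way is a User-Agent rule in .htaccess, along the lines of the sketch below. It assumes mod_rewrite is available and that Baidu's crawler keeps identifying itself with "Baiduspider" in its User-Agent string; adjust the pattern if that changes.

```apache
# Sketch: refuse requests from Baidu's crawler with 403 Forbidden.
# Assumes the crawler's User-Agent contains "Baiduspider" (matched case-insensitively).
RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} Baiduspider [NC]
RewriteRule .* - [F,L]
```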

If you have any further questions, feel free to post them below.

Thank you,

John-Paul

When I try to use .htaccess, it keeps turning on my hotlink protection.

As a result, when I go to Google Images and click on an image to visit the site, I get the page with no graphics on it.

You have told me this cannot happen – BUT IT IS HAPPENING

If I go to cPanel, remove the .htaccess file, then go back to the page and refresh it, the normal page is shown.

I have tried adding the Ph whatsit fix that you have up but that makes absolutely no difference.

My domain is https://searchpartygraphics.com

Hello Vicky,

I’m sorry that you appear to be having problems with hotlinking. If you had previously activated hotlink protection and Google indexed your site, then it’s possible that the missing images are due to those images having been blocked. Google would need to re-index your page without the hotlink protection. By default, the .htaccess file is always there, and it will only contain the hotlink protection settings if that option is enabled. When I access your page with hotlink protection off, I don’t see any problems, and there is an active .htaccess file.
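For reference, hotlink protection works by adding mod_rewrite rules to .htaccess. A typical set looks roughly like the illustrative sketch below; example.com stands in for your own domain, and the exact lines cPanel generates may differ. If nothing resembling these rules appears in your .htaccess file, that suggests hotlink protection is not what is blocking the images.

```apache
# Illustrative hotlink-protection rules (not the exact cPanel output).
# example.com is a placeholder for your own domain.
RewriteEngine On
# Skip the block when the Referer header is empty (e.g. direct visits).
RewriteCond %{HTTP_REFERER} !^$
# Skip the block when the request comes from your own site (http/https, with or without www).
RewriteCond %{HTTP_REFERER} !^https?://(www\.)?example\.com/ [NC]
# Any other request for an image file receives 403 Forbidden.
RewriteRule \.(jpe?g|gif|png|bmp)$ - [F,NC]
```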

I also looked up your site in Google Images, and it looks normal to me there too. Make sure that you’re clearing your browser cache. If you had accessed Google Images earlier and the page got cached in your browser without the images, then you may still be seeing it that way.

If you continue to have problems after clearing the browser cache, please tell us the URL you’re viewing, exactly what you’re seeing, and what you expect to see. We can then investigate the issue further for you.

Regards,

Arnel C.

I have the same question, but I can’t parse my raw access logs per your instructions because I’m on a shared server. How can I do this on a shared server?

BTW, I did follow your instructions for adding a second password and optimizing my WordPress site. Thanks.

On shared hosting, the access logs are still able to be obtained via cPanel under the Logs section.

How can I identify bad user IP addresses?

Hello Jeremy, and thank you for your comment.

I see that your account has been having some higher than normal CPU usage which you can see by looking at your CPU graphs in cPanel.

One of the best ways to get a good idea of bad IP addresses or other malicious users to block is to parse archived raw access logs. These archived raw access logs in cPanel allow you to see requests coming from the same malicious users over an extended period of time.

By default, your raw access logs are processed into statistics reports and then rotated, so the raw information is no longer available. I went ahead and enabled raw access log archiving for you, so that going forward you’ll have a better understanding of what is happening on your site.

Currently your raw access logs go back to 05/May/2014:09:02:05 and it looks like since then, these are the issues causing your higher CPU usage.

You should really optimize WordPress for your sites, making sure to use a WordPress caching plugin to speed them up. You should also disable the default wp-cron.php behavior in WordPress to cut down on unnecessary requests.

You can also review WordPress login attempts, as it looks like 120 different IP addresses have hit your wp-login.php script over 218 times in the available logs. This is common because WordPress brute force attacks have been a very big nuisance this year. In these cases, it’s generally recommended to set up a secondary WordPress password instead of repeatedly blocking IPs that have already attacked you.
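A secondary password of this kind is usually HTTP Basic Authentication placed in front of wp-login.php. The sketch below shows the general idea under a few assumptions: the password file path /home/username/.wpadmin is a hypothetical placeholder, you create that file yourself (for example with the htpasswd tool), and your host allows authentication directives in .htaccess.

```apache
# Sketch: ask for an extra username/password before wp-login.php is served.
# /home/username/.wpadmin is a hypothetical path; keep the file outside public_html.

# Use Apache's built-in 401 page, a commonly recommended step so the
# WordPress rewrite rules don't swallow the password prompt.
ErrorDocument 401 default

<Files wp-login.php>
AuthType Basic
AuthName "Secondary login"
AuthUserFile /home/username/.wpadmin
Require valid-user
</Files>
```

Because the prompt is handled by Apache before WordPress runs, brute force requests that fail it never reach the WordPress login script.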

I’d recommend checking back in a few days after implementing some of these WordPress solutions to see if your CPU usage has dropped back down to normal usage levels.

– Jacob